Sharpening operational focus and squeezing more efficiencies out of production assets—these are just two objectives that have COOs and operations managers turning to new technologies. One of the best of these technologies is predictive analytics. Predictive analytics isn’t new, but a growing number of companies are using it in predictive maintenance, quality control, demand forecasting, and other manufacturing functions to deliver efficiencies and make improvements in real time. So what is it?

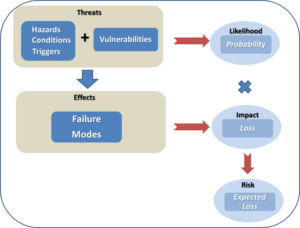

Predictive analytics is a blend of mathematics and technology that learns from experience (the data companies are already collecting) to predict a future behavior or outcome within an acceptable level of reliability.

Predictive analytics can play a substantial role in redefining your operations. Today, let’s explore three additional cases of predictive analytics in action:

- Predictive maintenance

- Smart grids

- Manufacturing

Predictive Maintenance

Predictive maintenance assesses equipment condition continuously and determines if and when maintenance should be performed. Instead of relying on routine, time-based scheduling, like having your oil changed every 3,000 miles, it promises to save money by calling for maintenance only when it is needed, including when it can head off an imminent equipment failure.

While equipment is in use, sensors measure vibration, temperature, high-frequency sound, air pressure, and more. Predictive models make sense of this streaming data and score it on the likelihood of a failure occurring. Coupled with in-memory technologies, these models can flag a likely machine failure hours before it occurs, so maintenance can be moved ahead of schedule and unplanned downtime avoided.
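To make the idea of scoring streaming sensor data a little more concrete, here is a minimal sketch in Python. It is not a production predictive model: it swaps in a simple rolling z-score (how far a new reading sits from the recent baseline) and squashes it into a 0-to-1 risk score. The class name `FailureScorer`, the window size, the alert threshold, and the sample vibration values are all assumptions made up for illustration.

```python
from collections import deque
from math import exp
from statistics import mean, stdev


class FailureScorer:
    """Scores each new sensor reading against a rolling baseline (hypothetical helper)."""

    def __init__(self, window=20, alert_threshold=0.9):
        self.history = deque(maxlen=window)      # most recent readings only
        self.alert_threshold = alert_threshold   # assumed cut-off, tune per machine

    def score(self, reading):
        """Return a 0..1 risk score, then add the reading to the baseline."""
        if len(self.history) < 5:
            # Not enough data yet to judge what "normal" looks like.
            self.history.append(reading)
            return 0.0
        baseline = mean(self.history)
        spread = stdev(self.history) or 1e-9     # guard against a flat signal
        z = abs(reading - baseline) / spread
        self.history.append(reading)
        # Logistic squashing: a reading 3 standard deviations out scores 0.5.
        return 1.0 / (1.0 + exp(-(z - 3.0)))

    def needs_maintenance(self, reading):
        """True when the risk score reaches the alert threshold."""
        return self.score(reading) >= self.alert_threshold


if __name__ == "__main__":
    scorer = FailureScorer()
    vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95] * 4 + [5.0]  # made-up readings
    for value in vibration:
        if scorer.needs_maintenance(value):
            print(f"Reading {value} looks abnormal: schedule maintenance")
```

A real deployment would typically train a model on labeled failure history across many sensors rather than rely on a single-signal threshold, but the flow is the same: each reading arrives, gets a score, and a high score triggers a maintenance call.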

This all means less downtime, decreased time to resolution, and optimal longevity and performance for equipment operators. For manufacturers, predictive maintenance can streamline inventory of spare parts and the ongoing monitoring services can become a source of new revenue. And as predictive maintenance becomes part of the equipment, it also has the potential to become a competitive advantage.

Smart Grids

Sensors and predictive analytics are also changing the way utilities manage highly distributed assets like electrical grids. From reliance on unconventional energy sources like solar and wind to the introduction of electric cars, the energy landscape is evolving. One of the biggest challenges facing energy companies today is keeping up with these rapid changes.

Smart grids emerge when sensor data is combined with other data sources such as temperature, humidity, and consumption forecasts at the meter level to predict demand and load. For example, combined with powerful in-memory technologies, predictive analytics can be used by electricity providers to improve load forecasting. That leads to frequent, less expensive adjustments that optimize the grid and maintain delivery of consistent and dependable power.
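As a rough illustration of load forecasting from combined data, the sketch below fits a straight line relating outdoor temperature to grid load and uses it to estimate tomorrow's load. This is an assumption-heavy simplification: real forecasts draw on many more inputs (humidity, meter-level consumption, calendar effects) and far richer models. The function names and the sample numbers are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def forecast_load(temps_c, loads_mw, next_temp_c):
    """Estimate load (MW) for a forecast temperature from past observations."""
    slope, intercept = fit_line(temps_c, loads_mw)
    return intercept + slope * next_temp_c


if __name__ == "__main__":
    # Made-up history: hotter days drive more air-conditioning load.
    temps_c = [20, 25, 30, 35]
    loads_mw = [200, 225, 250, 275]
    print(forecast_load(temps_c, loads_mw, next_temp_c=40))
```

The more often a forecast like this is refreshed with new sensor and meter data, the smaller each correction to the grid needs to be.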

As more houses are equipped with smart meters, data scientists using predictive analytics can build advanced models and apply forecasting to groups of customers with similar load profiles. They can also present those customers with some ideas to reduce their energy bill.
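Grouping customers by load profile can be sketched with a small clustering routine. The example below uses a bare-bones k-means written with only the standard library; it is one possible technique, not necessarily what any given utility runs. Each profile is normalized to shares of the daily total so that households are grouped by the shape of their usage rather than its size. The function names, the four time-of-day buckets, and the sample readings are assumptions for illustration.

```python
from math import dist  # Python 3.8+
from statistics import mean


def normalize(profile):
    """Convert raw kWh readings to shares of the daily total (assumes total > 0)."""
    total = sum(profile)
    return [value / total for value in profile]


def nearest(point, centroids):
    """Index of the centroid closest to the point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: dist(point, centroids[i]))


def group_profiles(profiles, k=2, iterations=10):
    """Return one cluster label per customer using a minimal k-means."""
    points = [normalize(p) for p in profiles]
    centroids = points[:k]  # naive seeding: the first k customers
    for _ in range(iterations):
        labels = [nearest(p, centroids) for p in points]
        updated = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            # Keep the old centroid if a cluster ends up empty.
            updated.append([mean(col) for col in zip(*members)] if members else centroids[i])
        centroids = updated
    return [nearest(p, centroids) for p in points]


if __name__ == "__main__":
    # Made-up kWh per period: night, morning, afternoon, evening.
    profiles = [
        [1, 2, 2, 5],    # evening peak
        [1, 5, 4, 2],    # daytime peak
        [2, 4, 4, 10],   # evening peak, larger household
        [2, 9, 8, 3],    # daytime peak, larger household
    ]
    print(group_profiles(profiles))  # prints [0, 1, 0, 1]
```

Once customers are grouped this way, a forecast or an energy-saving suggestion can be built per group instead of per household.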

Manufacturing

The manufacturing industry continues its relentless drive for customization and "lot sizes of 1" with innovations such as the connected factory, the Internet of Things, next-shoring, and 3D printing. It's also hard at work extracting the maximum productivity from existing facilities, which traditionally has been accomplished through automation and IT resources. According to Aberdeen, the need to reduce the cost of manufacturing operations is now the top reason companies seek more insight from data.

Quality control has always been an area where statistical methods play a key role in deciding whether to accept or reject a lot. Now manufacturers are extending predictive analytics to the testing phase as well. For example, tests on components like high-end car engines can be stopped long before the end of the actual procedure thanks to predictive analytics. By comparing data from the component's ongoing test against data from other engines, engineers can identify potential issues faster. That, in turn, maximizes the capacity available for testing and reduces unproductive time. This is only one of the many applications manufacturers find for predictive analytics.
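One simple way to picture stopping a test early is to compare the ongoing readings, step by step, against a band built from earlier engine tests, and halt as soon as a reading falls outside it. The sketch below assumes that approach; the function names, the three-standard-deviation band, and the sample measurements are hypothetical, and a real test bench would likely use a richer model.

```python
from statistics import mean, stdev


def build_envelope(past_runs, k=3.0):
    """Per-step (low, high) band from earlier runs on engines assumed to be healthy."""
    envelope = []
    for step_values in zip(*past_runs):
        centre, spread = mean(step_values), stdev(step_values)
        envelope.append((centre - k * spread, centre + k * spread))
    return envelope


def first_violation(readings, envelope):
    """Return the index of the first reading outside the band, or None."""
    for step, (value, (low, high)) in enumerate(zip(readings, envelope)):
        if not low <= value <= high:
            return step
    return None


if __name__ == "__main__":
    # Made-up oil temperature traces from three earlier engine tests.
    past_runs = [
        [90, 95, 100, 105, 110],
        [91, 96, 101, 104, 111],
        [89, 94, 99, 106, 109],
    ]
    envelope = build_envelope(past_runs)
    ongoing = [90, 96, 108]  # test still in progress
    print(first_violation(ongoing, envelope))  # prints 2
```

Here the third reading already falls outside the band, so the test can be stopped and the bench freed for the next engine rather than running the full procedure.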

Innovations on the Shop Floor

Predictive analytics provides an excellent opportunity for COOs and operations managers to extract additional value from production assets. It can also create critical differentiators in the way products are created and delivered to customers, whether the analytics are offered as a paid service (predictive maintenance) or as insight (predicting future electricity consumption).

However a company chooses to use it, predictive analytics can be the key to beating the competition.

Discover and Follow

And join the predictive conversation by following me on Twitter @pileroux.